Hi @paultaylor,

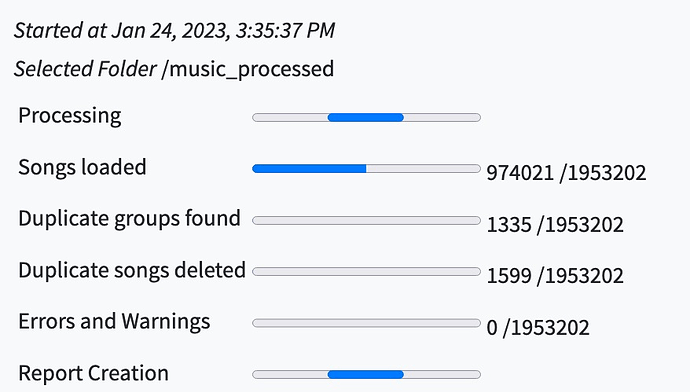

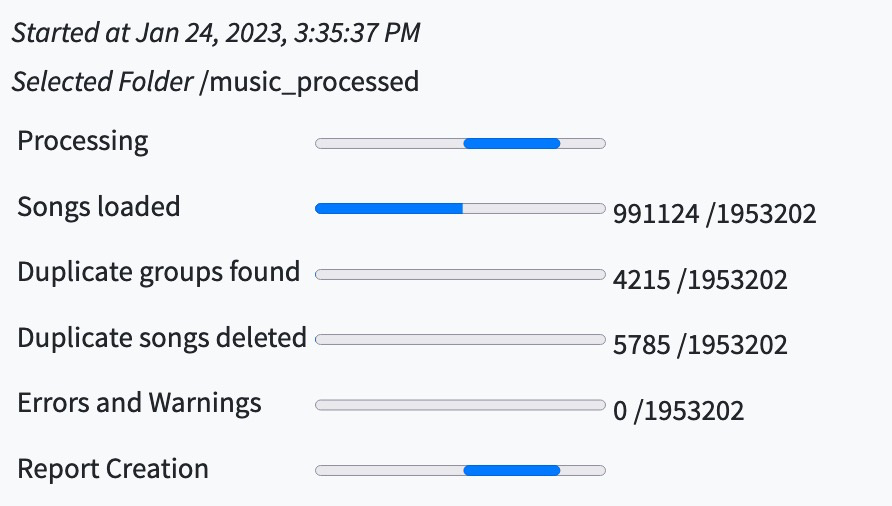

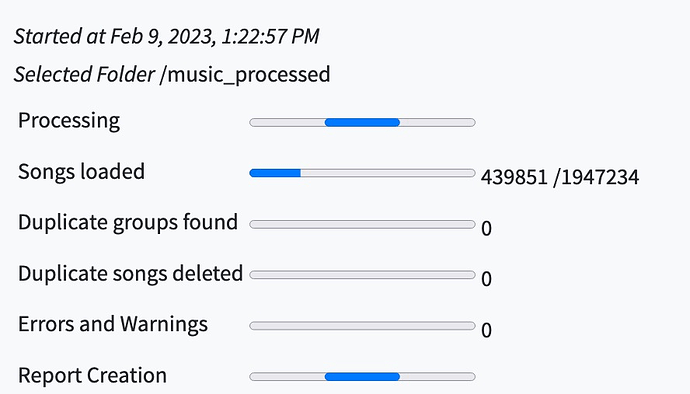

I had a duplicate-deletion job running on my /music_processed/ folder, and its report showed:

Selected Folder : /music_processed

Loaded 1134798 songs for duplicate checking

There are 146969 duplicate keys

219889 Duplicates found and deleted in 10 days 0 hours 13 minutes 6 seconds

After 10 days, only about halfway through, the job was reported as finished.

However, if I check the logs with `tail -f songkong_debug0-0.log`:

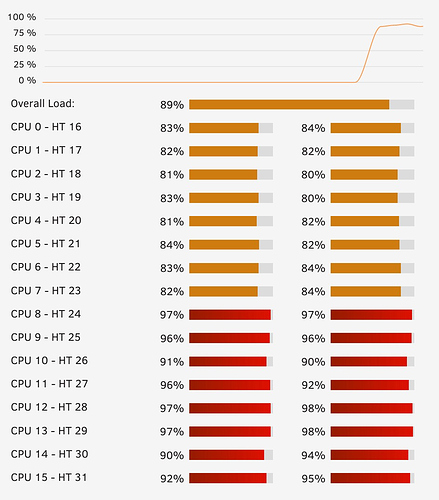

I can see that the job is actually still running and is currently processing the folders starting with the letter P.

Here is an extract:

23/01/2023 18.37.26:CET:DeleteDuplicatesLoadFolderWorker:loadFiles:SEVERE: end:/music_processed/Prince and The New Power Generation/Gett Off:6:1160662

23/01/2023 18.37.26:CET:DeleteDuplicatesLoadFolderWorker:loadFiles:SEVERE: start:/music_processed/Prince and The New Power Generation/Money Don't Matter 2 Night:2:1160662

23/01/2023 18.37.39:CET:DeleteDuplicatesLoadFolderWorker:loadFiles:SEVERE: end:/music_processed/Prince and The New Power Generation/Money Don't Matter 2 Night:2:1160664

23/01/2023 18.37.39:CET:DeleteDuplicatesLoadFolderWorker:loadFiles:SEVERE: start:/music_processed/Prince and The New Power Generation/The Morning Papers:2:1160664

I’ve sent you the support files, of course.