Okay, reviewing it again, I remembered something. There are two separate queues:

A bounded queue for most tasks, which controls matching. Once this queue is full, no more songs are loaded. It has as many threads as there are CPUs, and it also has a timeout that is triggered for any job that takes too long to complete.
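A queue like this one can be sketched with a standard `java.util.concurrent.ThreadPoolExecutor`. This is a minimal illustration that assumes Java, not the actual implementation. The class `WorkerPool`, the helper `withTimeout` and the `watchdog` scheduler are hypothetical. The queue capacity is passed in (the logs below suggest 100), and "no more songs are loaded once the queue is full" is modelled with a rejection handler that makes the submitting thread wait for space.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class WorkerPool {
    /** Fixed pool with one thread per CPU, fed from a bounded queue (hypothetical sketch). */
    static ThreadPoolExecutor create(int queueCapacity) {
        int threads = Runtime.getRuntime().availableProcessors();
        return new ThreadPoolExecutor(threads, threads, 0L, TimeUnit.MILLISECONDS,
                new ArrayBlockingQueue<>(queueCapacity),
                // Queue full: block the submitting (song loading) thread until a slot frees up,
                // so no more songs are loaded while matching is backed up.
                (job, pool) -> {
                    try {
                        pool.getQueue().put(job);
                    } catch (InterruptedException e) {
                        Thread.currentThread().interrupt();
                        throw new RejectedExecutionException("Interrupted waiting for queue space", e);
                    }
                });
    }

    /** Wraps a job so its worker thread is interrupted if the job runs longer than the timeout. */
    static Runnable withTimeout(Runnable job, ScheduledExecutorService watchdog,
                                long timeout, TimeUnit unit) {
        return () -> {
            Thread worker = Thread.currentThread();
            // The clock starts when the job starts running, not when it was queued.
            ScheduledFuture<?> alarm = watchdog.schedule(worker::interrupt, timeout, unit);
            try {
                job.run();
            } finally {
                alarm.cancel(false);
                Thread.interrupted(); // clear an interrupt that fired as the job was finishing
            }
        };
    }
}
```

The timeout only stops jobs that respond to interruption (blocking calls such as `Thread.sleep` or interruptible I/O); a job stuck in a tight loop keeps running. The blocking rejection handler also bypasses the pool's own shutdown check, so a fuller version would need to handle submissions made after shutdown.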

An unbounded queue for song saving. Its jobs have no timeout, so that if the task is cancelled we cannot interrupt a job while it is writing data to a file, which could corrupt the file. The song saving task does use CPU, but most of its work is I/O, so we only provide two threads to avoid too much contention from writing multiple files to the disk at the same time.
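The song saving queue differs in three ways: the queue is unbounded, there is no timeout, and only two threads are used. A minimal Java sketch along the same lines, where `SongSaverPool`, `cancelGracefully` and the `SongSaver-` thread names are hypothetical, and where it is assumed that on cancellation pending saves should be allowed to finish rather than be interrupted:

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class SongSaverPool {
    static final int SAVER_THREADS = 2;

    static ExecutorService create() {
        AtomicInteger count = new AtomicInteger();
        // Hypothetical naming so the saver threads are easy to spot in a thread dump.
        ThreadFactory named = r -> new Thread(r, "SongSaver-" + count.incrementAndGet());
        // Unbounded LinkedBlockingQueue: submitting a save job never blocks or gets rejected,
        // and no timeout wrapper is applied, so a running save is never interrupted by a timer.
        return new ThreadPoolExecutor(SAVER_THREADS, SAVER_THREADS, 0L, TimeUnit.MILLISECONDS,
                new LinkedBlockingQueue<>(), named);
    }

    /**
     * On cancellation, shutdown() refuses new jobs but lets queued and running saves finish,
     * whereas shutdownNow() would interrupt threads that may be in the middle of a write.
     */
    static boolean cancelGracefully(ExecutorService saver, long waitSeconds) {
        saver.shutdown();
        try {
            return saver.awaitTermination(waitSeconds, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

The trade-off of an unbounded queue is that nothing pushes back on the matchers: if saving is slower than matching, the backlog (and the memory it holds) simply keeps growing.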

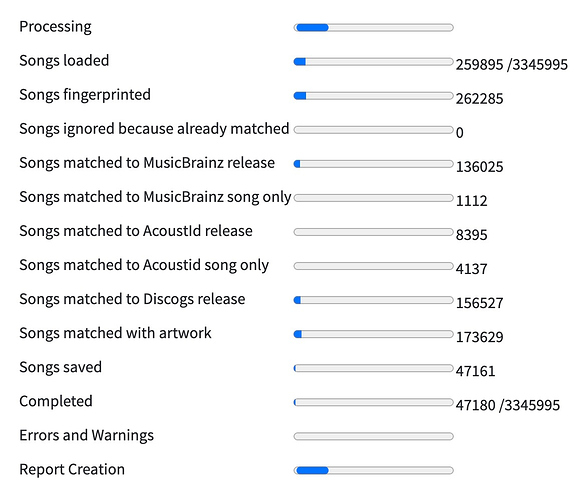

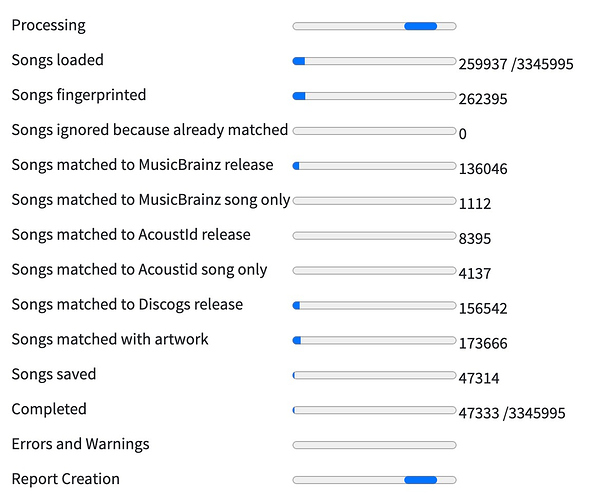

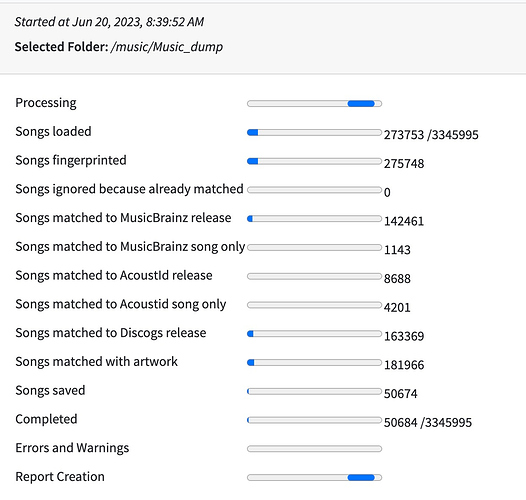

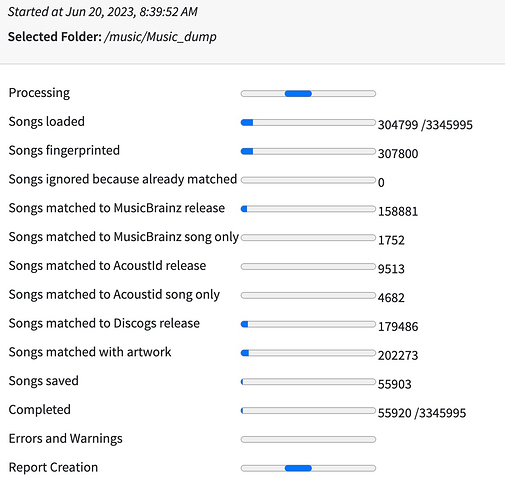

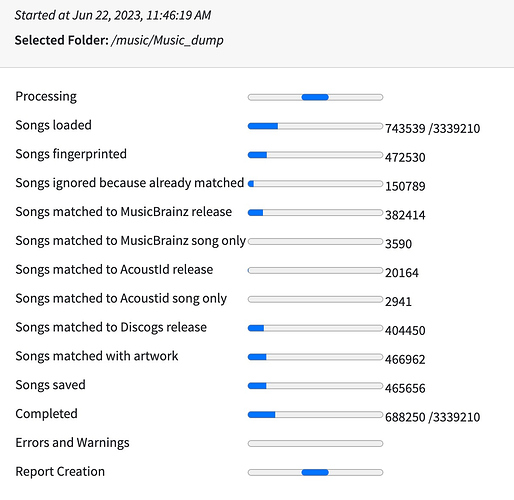

Once I had fixed the server, song saving went up but was still well behind song matching. So I don't know whether this was because of an issue with the server, or because of a mismatch between the many threads used for song matching and the only two threads used for song saving.

This is a summary from the monitor logs:

21/06/2023 09.14.54:CEST:MonitorExecutors:outputQueueSize:SEVERE: QueueSize:Worker:100

21/06/2023 09.14.54:CEST:MonitorExecutors:outputQueueSize:SEVERE: QueueSize:SongSaver:21800

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: DbConnectionsOpen:39

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: SongPreMatcherMatcher:0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: NaimMatcher:0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: AcoustidSubmitter:111718

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: StartMatcher:27356:27321:27321:260000

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzSongGroupMatcher1:27321:27321:27287:259804

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzUpdateSongOnly:0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzMetadataMatches:0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzSongGroupMatcher2(SubGroupAnalyser):630:630:630:10043

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzSongGroupMatcher3(WithoutDuplicates):182:182:182:1772

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzSongGroupMatcher4(AnalyserArtistFolder):0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: DiscogsMultiFolderSongGroupMatcher:14886:14821:14820:160768

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: DiscogsSongGroupMatcher:14886:14821:14820:160768

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: MusicBrainzSongMatcher:0:0:0:0

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: DiscogsSongMatcher:275:275:275:275

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: DiscogsUpdateSongGroup:2631:2631:2631:21343

21/06/2023 09.14.54:CEST:MonitorExecutors:outputPipelines:SEVERE: SongSaver:27784:5984:4410:257829

So we can see that at that point in time the Worker queue is at its maximum of 100 tasks, while the SongSaver queue holds 21,800 tasks.

Then we list the different tasks, for example:

MusicBrainzSongGroupMatcher1:27321:27321:27287:259804

This means the MusicBrainzSongGroupMatcher1 task queued 27,321 jobs, 27,321 were started, 27,287 were completed (this can include timeouts), and 259,804 music files were loaded into the task for processing. Each job usually groups multiple related songs, e.g. an album.
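The four counters on each outputPipelines line can be extracted programmatically, which makes it easier to compare stages. A small Java sketch: the class `PipelineStats` and its regular expression are assumptions based only on the lines above, where the layout is `Task:queued:started:completed:songsLoaded`; lines with fewer counters (such as AcoustidSubmitter or DbConnectionsOpen) are skipped.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class PipelineStats {
    // Assumed layout, taken from the log lines: Task:queued:started:completed:songsLoaded
    private static final Pattern LINE =
            Pattern.compile("SEVERE:\\s+(\\S+?):(\\d+):(\\d+):(\\d+):(\\d+)\\s*$");

    static final class Stats {
        final String task;
        final long queued, started, completed, songsLoaded;

        Stats(String task, long queued, long started, long completed, long songsLoaded) {
            this.task = task;
            this.queued = queued;
            this.started = started;
            this.completed = completed;
            this.songsLoaded = songsLoaded;
        }

        /** Jobs queued but not yet picked up by a thread (assumed interpretation). */
        long waiting() {
            return queued - started;
        }
    }

    /** Parses one outputPipelines line; lines without all four counters return empty. */
    static Optional<Stats> parse(String line) {
        Matcher m = LINE.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new Stats(m.group(1),
                Long.parseLong(m.group(2)), Long.parseLong(m.group(3)),
                Long.parseLong(m.group(4)), Long.parseLong(m.group(5))));
    }
}
```

For the SongSaver line, `waiting()` returns 21,800; for MusicBrainzSongGroupMatcher1 it returns 0.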

SongSaver:27784:5984:4410:257829

Whereas here, only 5,984 of the 27,784 jobs have even started, and the remaining 21,800 match the SongSaver queue size above. But you said the disks were not busy, so this is confusing.

Unfortunately, the part of the monitoring that shows a stack trace of each thread only covers the Worker threads, not the SongSaver threads, so I cannot see what the SongSaver threads were doing when this snapshot was taken. I have fixed this for the next version, due next week.
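Capturing traces for every pool, not just the Worker threads, can be done from inside the JVM with `Thread.getAllStackTraces()`. A minimal sketch, assuming the pools give their threads recognisable name prefixes (the `SongSaver-` and `Worker-` prefixes and the `ThreadSnapshot` class are hypothetical):

```java
import java.util.Map;

class ThreadSnapshot {
    /** Text dump of every live thread whose name starts with one of the given prefixes. */
    static String dump(String... namePrefixes) {
        StringBuilder out = new StringBuilder();
        for (Map.Entry<Thread, StackTraceElement[]> entry : Thread.getAllStackTraces().entrySet()) {
            Thread thread = entry.getKey();
            if (!matchesAny(thread.getName(), namePrefixes)) {
                continue;
            }
            // Thread state (RUNNABLE, BLOCKED, WAITING, ...) followed by its stack frames.
            out.append(thread.getName()).append(" state=").append(thread.getState()).append('\n');
            for (StackTraceElement frame : entry.getValue()) {
                out.append("    at ").append(frame).append('\n');
            }
        }
        return out.toString();
    }

    private static boolean matchesAny(String name, String[] prefixes) {
        for (String prefix : prefixes) {
            if (name.startsWith(prefix)) {
                return true;
            }
        }
        return false;
    }
}
```

`ThreadSnapshot.dump("Worker-", "SongSaver-")` would return frames for both pools. A thread blocked in native file I/O usually still reports RUNNABLE, so the top frames say more than the state does. Running the JDK's `jstack` tool against the process gives a similar full dump without any code changes.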